We are happy to announce that we have founded a new startup called DeepUp. DeepUp is EXIST-funded and develops novel solutions for geolocation and intelligent data processing.

Author: stachnis

2019-07: Code Available: Bonnetal – an easy-to-use deep-learning training and deployment pipeline for robotics by Andres Milioto

We have recently open-sourced Bonnetal, an easy-to-use deep-learning training and deployment pipeline to do a suite of perception tasks, that we have developed for our robots’ perception systems.

Bonnetal can pre-train popular CNN backbones on ImageNet for transfer learning (pre-trained weights for popular models are downloaded by default from our server, so training never has to start from scratch), and it has fast decoders for real-time semantic segmentation. We have more applications in the internal pipeline that we will also be open-sourcing within the framework, such as object detection, instance segmentation, keypoint/feature extraction, and more.

The key features of Bonnetal are:

- The training interface is easy to use, even for a novice in machine learning,

- The library of models for transfer learning requires significantly less training data and time for a new task and dataset, exploiting the knowledge that is already condensed in the pre-trained weights about low-level geometry and texture,

- All architectures can be used with our C++ library, which also has a ROS wrapper so that you don’t have to code at all, and

- All of the supported architectures are tested using NVIDIA’s TensorRT so that you can get that extra juice out of your Jetson or GPU, including fast inference tricks such as INT8 quantization and calibration (vs. standard, slower, floating point 32).
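To give an intuition for the INT8 quantization mentioned in the last point, here is a minimal sketch of symmetric linear quantization in plain Python. It is an illustration only and not how TensorRT or Bonnetal implement it: the function names `quantize_int8` and `dequantize_int8` are hypothetical, and the scale is simply taken from the largest absolute value, whereas a real calibration step chooses it from representative data.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale.

    Assumption for this sketch: the scale comes from the largest absolute
    value. A real calibration procedure picks it from sample data instead.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# The rounding error per value is at most half a quantization step.
max_error = max(abs(r - w) for r, w in zip(restored, weights))
print(q, scale, max_error)
```

The trade-off is a small, bounded rounding error per value in exchange for 8-bit arithmetic, which is what makes inference faster than with 32-bit floating point.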

This video (https://youtu.be/C7rUycC5Rts) shows a person-vs-background segmentation network using a MobileNetV2 architecture with a small Atrous Spatial Pyramid Pooling module, running quantized to INT8 for fast inference, achieving 200 FPS at VGA resolution on a single GPU.

The code is available on our lab’s GitHub: https://github.com/PRBonn/bonnetal

2019-05: Code Available: ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras by Emanuele Palazzolo

ReFusion – 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals

Mapping and localization are essential capabilities of robotic systems. Although the majority of mapping systems focus on static environments, the deployment in real-world situations requires them to handle dynamic objects. In this paper, we propose an approach for an RGB-D sensor that is able to consistently map scenes containing multiple dynamic elements. For localization and mapping, we employ an efficient direct tracking on the truncated signed distance function (TSDF) and leverage color information encoded in the TSDF to estimate the pose of the sensor. The TSDF is efficiently represented using voxel hashing, with most computations parallelized on a GPU. For detecting dynamics, we exploit the residuals obtained after an initial registration, together with the explicit modeling of free space in the model. We evaluate our approach on existing datasets, and provide a new dataset showing highly dynamic scenes. These experiments show that our approach often surpasses other state-of-the-art dense SLAM methods. We make available our dataset with the ground truth for both the trajectory of the RGB-D sensor, obtained by a motion capture system, and the model of the static environment, obtained using a high-precision terrestrial laser scanner.

If you use our implementation in your academic work, please cite the corresponding paper: E. Palazzolo, J. Behley, P. Lottes, P. Giguère, C. Stachniss. ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals, Submitted to IROS, 2019 (arxiv paper).

This code is related to the following publications:

E. Palazzolo, J. Behley, P. Lottes, P. Giguère, C. Stachniss. ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals, Submitted to IROS, 2019 (arxiv paper).
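As a rough illustration of the two ideas named in the ReFusion abstract, a TSDF model and residuals used to flag dynamic measurements, the following sketch works on a single camera ray in plain Python. It is a simplified toy and not the released implementation: the function names, the one-dimensional voxel layout, the unit measurement weight, and the threshold of 0.05 are all assumptions made for this example.

```python
def truncated_sdf(measured_depth, voxel_depth, truncation):
    """Signed distance from a voxel to the observed surface along the ray,
    clamped to [-truncation, truncation]. Positive values mean free space."""
    sdf = measured_depth - voxel_depth
    return max(-truncation, min(truncation, sdf))


def integrate(voxel_depths, tsdf, weights, measured_depth, truncation):
    """Fuse one depth measurement into the voxels along a single ray."""
    for i, voxel_depth in enumerate(voxel_depths):
        if measured_depth - voxel_depth < -truncation:
            continue  # voxel lies far behind the surface, so it is not updated
        sdf = truncated_sdf(measured_depth, voxel_depth, truncation)
        # Running average with a unit weight per measurement (an assumption).
        tsdf[i] = (tsdf[i] * weights[i] + sdf) / (weights[i] + 1.0)
        weights[i] += 1.0


def residual(voxel_depths, tsdf, measured_depth):
    """Absolute TSDF value at the voxel nearest to the measured surface point."""
    nearest = min(range(len(voxel_depths)),
                  key=lambda i: abs(voxel_depths[i] - measured_depth))
    return abs(tsdf[nearest])


voxel_depths = [0.8, 0.9, 1.0, 1.1, 1.2]  # voxel centers along the ray, in meters
tsdf = [0.0] * len(voxel_depths)
weights = [0.0] * len(voxel_depths)
truncation = 0.15

# Three noisy observations of a static wall at roughly 1 m.
for depth in (1.0, 1.02, 0.98):
    integrate(voxel_depths, tsdf, weights, depth, truncation)

threshold = 0.05  # hypothetical residual threshold
wall_is_dynamic = residual(voxel_depths, tsdf, 1.0) > threshold
intruder_is_dynamic = residual(voxel_depths, tsdf, 0.8) > threshold
print(wall_is_dynamic, intruder_is_dynamic)
```

A measurement that agrees with the model lands near the zero crossing of the TSDF and has a small residual, while a surface appearing in space the model knows to be free has a large one, which is the intuition behind using residuals and free space to detect dynamics.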

2019-03-11: Cyrill Stachniss receives AMiner TOP 10 Most Influential Scholar Award (2007-2017)

Cyrill Stachniss received the 2018 AMiner TOP 10 Most Influential Scholar Award (2007-2017) in the area of robotics. The AMiner Most Influential Scholar Annual List names the world’s top-cited research scholars from the fields of AI/robotics. The list is conferred in recognition of outstanding technical achievements with lasting contribution and impact to the research community. In 2018, the winners are among the most-cited scholars whose papers were published in the top venues of their respective subject fields between 2007 and 2017. Recipients are automatically determined by a computer algorithm deployed in the AMiner system that tracks and ranks scholars based on the citation counts of their top-venue publications. Specifically, the list for the field of robotics answers the question: who were the most cited scholars at the ICRA and IROS conferences, which are identified as the top venues of this field, between 2007 and 2017?

2019-01-17: New Investment in Escarda Technologies GmbH

Our startup Escarda Technologies GmbH received funding for the next five years from the Scansonic Holding, Berlin, which is now the third partner of Escarda Technologies GmbH. We are thrilled to push our new endeavor of fully automated, laser-based weeding forward.

2018-12: Christian Merfels and Kaihong Huang defended their PhD Theses

Christian Merfels and Kaihong Huang have both successfully defended their Ph.D. theses.

2018-12: Code Available: Release Surfel-based Mapping using 3D Laser Range Data by Jens Behley

SuMa – Surfel-based Mapping using 3D Laser Range Data

Mapping of 3D laser range data from a rotating laser range scanner, e.g., the Velodyne HDL-64E. For representing the map, we use surfels, which enable fast rendering of the map for point-to-plane ICP and loop closure detection. If you use our implementation in your academic work, please cite the corresponding paper: J. Behley, C. Stachniss. Efficient Surfel-Based SLAM using 3D Laser Range Data in Urban Environments, Proc. of Robotics: Science and Systems (RSS), 2018 (pdf).

This code is related to the following publications:

J. Behley, C. Stachniss. Efficient Surfel-Based SLAM using 3D Laser Range Data in Urban Environments, Proc. of Robotics: Science and Systems (RSS), 2018 (pdf).
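For readers unfamiliar with the point-to-plane ICP mentioned in the SuMa description above, the following small sketch shows the error term it minimizes. It is a generic illustration and not code from the SuMa release: the function name and the example values are made up, and a surfel is reduced here to a position and a unit normal.

```python
def point_to_plane_residual(point, surfel_position, surfel_normal):
    """Signed distance of a point to the plane through surfel_position
    with unit normal surfel_normal."""
    diff = [p - q for p, q in zip(point, surfel_position)]
    return sum(d * n for d, n in zip(diff, surfel_normal))


# One surfel on the plane z = 1, facing along the z axis.
surfel_position = (0.0, 0.0, 1.0)
surfel_normal = (0.0, 0.0, 1.0)

# Two measured points: only their offset along the normal counts,
# sliding within the plane (x, y) is not penalized.
points = [(0.3, -0.2, 1.05), (1.0, 2.0, 0.9)]
residuals = [point_to_plane_residual(p, surfel_position, surfel_normal)
             for p in points]

# ICP searches for the sensor pose that minimizes this sum of squares.
error = sum(r * r for r in residuals)
print(residuals, error)
```

Because the residual ignores motion within the plane, point-to-plane ICP tends to handle large planar structures such as roads and facades well, where the exact point-to-point correspondence is ambiguous.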

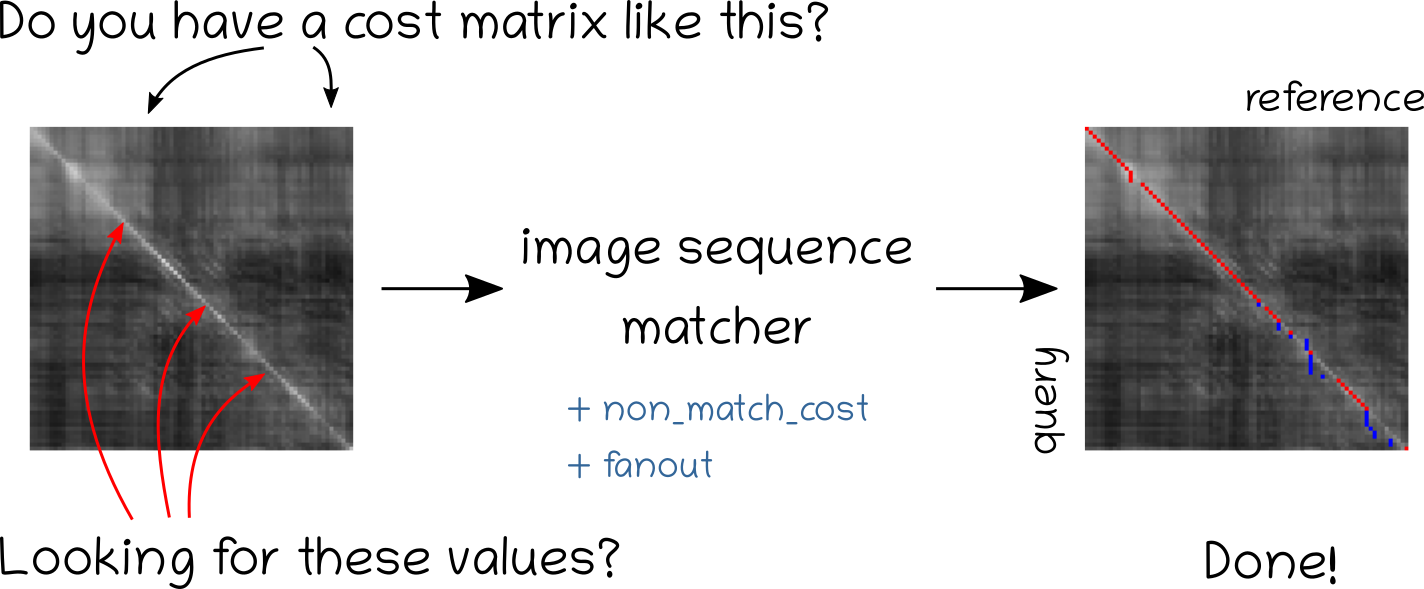

2018-12: Code Available: Release for offline image sequence matching baseline by Olga Vysotska

Image sequence matcher

Image sequence matcher on GitHub

Finds the image matches between two sequences of images given a similarity (cost) matrix. Particularly suited for image sequence matching in the context of visual place recognition in changing environments.
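To make the idea of matching two sequences through a cost matrix concrete, here is a minimal dynamic-programming sketch. It is not necessarily the algorithm used in the repository: the function name `match_sequences`, the rule that the reference index may advance by 0, 1, or 2 per query image, and the example matrix are assumptions for illustration.

```python
def match_sequences(cost):
    """Return the cheapest monotonic path through a cost matrix.

    cost[i][j] is the matching cost between query image i and reference
    image j. Each query image gets exactly one reference image, and the
    reference index may advance by 0, 1, or 2 between consecutive queries.
    """
    rows, cols = len(cost), len(cost[0])
    acc = [[float("inf")] * cols for _ in range(rows)]
    back = [[None] * cols for _ in range(rows)]

    # The query sequence may start anywhere in the reference sequence.
    for j in range(cols):
        acc[0][j] = cost[0][j]

    for i in range(1, rows):
        for j in range(cols):
            candidates = [(acc[i - 1][j - step], j - step)
                          for step in (0, 1, 2) if j - step >= 0]
            best, prev = min(candidates)
            acc[i][j] = best + cost[i][j]
            back[i][j] = prev

    # Backtrack from the cheapest cell in the last row.
    j = min(range(cols), key=lambda c: acc[rows - 1][c])
    path = []
    for i in range(rows - 1, -1, -1):
        path.append((i, j))
        if i > 0:
            j = back[i][j]
    path.reverse()
    return path


cost = [
    [0.1, 0.9, 0.9, 0.9],
    [0.9, 0.2, 0.9, 0.9],
    [0.9, 0.8, 0.1, 0.9],
    [0.9, 0.9, 0.9, 0.2],
]
matches = match_sequences(cost)
print(matches)
```

Exploiting the sequence order in this way lets individually ambiguous image pairs be resolved by their neighbors, which is why sequence information helps when the appearance of a place changes strongly.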

2018-11: Igor Bogoslavskyi defended his PhD Thesis

Igor Bogoslavskyi successfully defended his PhD thesis entitled “Robot mapping and navigation in real-world environments” in the Photogrammetry & Robotics Lab at the University of Bonn.

Download the PhD thesis

Robots can perform various tasks, such as mapping hazardous sites, taking part in search-and-rescue scenarios, or delivering goods and people. Robots operating in the real world face many challenges on the way to the completion of their mission. Essential capabilities required for the operation of such robots are mapping, localization and navigation. Solving all of these tasks robustly presents a substantial difficulty as these components are usually interconnected, i.e., a robot that starts without any knowledge about the environment must simultaneously build a map, localize itself in it, analyze the surroundings and plan a path to efficiently explore an unknown environment. In addition to the interconnections between these tasks, they highly depend on the sensors used by the robot and on the type of the environment in which the robot operates. For example, an RGB camera can be used in an outdoor scene for computing visual odometry or for detecting dynamic objects, but becomes less useful in an environment that does not have enough light for cameras to operate. The software that controls the behavior of the robot must seamlessly process all the data coming from different sensors. This often leads to systems that are tailored to a particular robot and a particular set of sensors. In this thesis, we challenge this concept by developing and implementing methods for a typical robot navigation pipeline that can work seamlessly with different types of sensors in both indoor and outdoor environments. With the emergence of new range-sensing RGBD and LiDAR sensors, there is an opportunity to build a single system that can operate equally robustly in indoor and outdoor environments and thus extends the application areas of mobile robots.

The techniques presented in this thesis aim to be used with both RGBD and LiDAR sensors without adaptations for individual sensor models by using a range image representation, and aim to provide methods for navigation and scene interpretation in both static and dynamic environments. For a static world, we present a number of approaches that address the core components of a typical robot navigation pipeline. At the core of building a consistent map of the environment using a mobile robot lies point cloud matching. To this end, we present a method for photometric point cloud matching that treats RGBD and LiDAR sensors in a uniform fashion and is able to accurately register point clouds at the frame rate of the sensor. This method serves as a building block for the further mapping pipeline. In addition to the matching algorithm, we present a method for traversability analysis of the currently observed terrain in order to guide an autonomous robot to the safe parts of the surrounding environment. A source of danger when navigating difficult-to-access sites is the fact that the robot may fail in building a correct map of the environment. This dramatically impacts the ability of an autonomous robot to navigate towards its goal in a robust way. Thus, it is important for the robot to be able to detect these situations and find its way home without relying on any kind of map. To address this challenge, we present a method for analyzing the quality of the map that the robot has built to date, and safely returning the robot to the starting point in case the map is found to be in an inconsistent state.

The scenes in dynamic environments are vastly different from the ones experienced in static ones. In a dynamic setting, objects can be moving, which makes static traversability estimates insufficient. With the approaches developed in this thesis, we aim at identifying distinct objects and tracking them to aid navigation and scene understanding. We target these challenges by providing a method for clustering a scene taken with a LiDAR scanner and a measure for determining whether two clustered objects are similar, which can aid the tracking performance.

All methods presented in this thesis are capable of supporting real-time robot operation, rely on RGBD or LiDAR sensors and have been tested on real robots in real-world environments and on real-world datasets. All approaches have been published in peer-reviewed conference papers and journal articles. In addition to that, most of the presented contributions have been released publicly as open source software.

2018-10: Olga Vysotska at the Grace Hopper Conference

Olga Vysotska attended the Grace Hopper Conference 2018 (GHC), invited through a Google travel grant. GHC is the biggest gathering of women in computing; this year, around 25,000 people attended the event in Houston, TX: https://ghc.anitab.org.